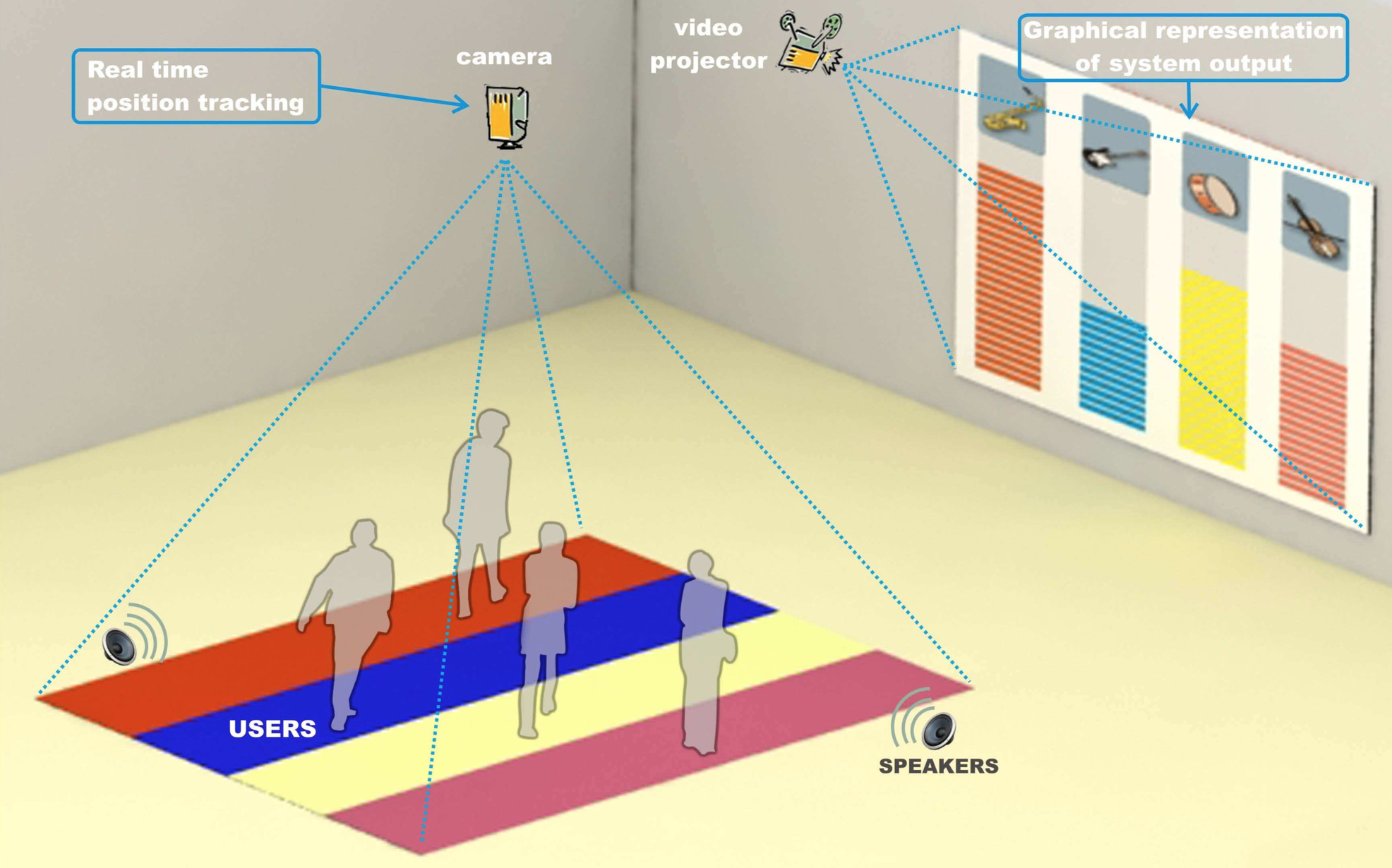

Body Music is a physically interactive system that extracts expressive motion features from the player's full-body movements and gestures in a room. The values of these features are mapped onto acoustic parameters for the real-time expressive rendering of a piece of music. They are also mapped onto visual feedback that is generated in real time and projected on a screen in front of the player.

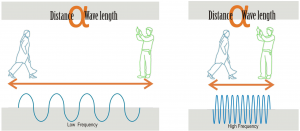

When users stand far apart, the system produces a lower-frequency sound. As they move closer together, the frequency rises, giving a higher pitch.
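The distance-to-pitch mapping can be sketched as follows. This is a minimal illustration, not the original system's code. It assumes the vision stage yields each player's (x, y) floor position in metres, summarises "how far apart" as the mean pairwise distance, and interpolates on a logarithmic (octave) scale. The names `D_MIN`, `D_MAX`, `F_LOW`, `F_HIGH` and their values are hypothetical.

```python
import math

# Hypothetical tuning constants, not taken from the original system:
# observable distance range (metres) and output frequency range (Hz).
D_MIN, D_MAX = 0.5, 5.0
F_LOW, F_HIGH = 220.0, 880.0

def mean_pairwise_distance(positions):
    """Average Euclidean distance between every pair of (x, y) positions."""
    pairs = [(a, b) for i, a in enumerate(positions) for b in positions[i + 1:]]
    if not pairs:
        return D_MAX  # assumption: a lone player counts as "far apart"
    return sum(math.dist(a, b) for a, b in pairs) / len(pairs)

def distance_to_frequency(d):
    """Map distance to pitch: far apart -> F_LOW, close together -> F_HIGH."""
    d = min(max(d, D_MIN), D_MAX)      # clamp to the observable range
    t = (D_MAX - d) / (D_MAX - D_MIN)  # 0.0 when far apart, 1.0 when close
    return F_LOW * (F_HIGH / F_LOW) ** t  # log-scale interpolation

# Two players 5 m apart sit at the bottom of the range.
print(distance_to_frequency(mean_pairwise_distance([(0, 0), (3, 4)])))
```

Interpolating in the exponent rather than linearly in hertz means equal steps in distance give equal musical intervals. With the assumed constants, halving the gap between the extremes moves the pitch by exactly one octave.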

Exploring music in this intuitive, physical way gives players a direct feel for fundamental properties of music, such as frequency, amplitude, tempo and time signature. This lowers the barriers to appreciating music. It may also inspire more people to learn music and music theory, and to develop new approaches to music.

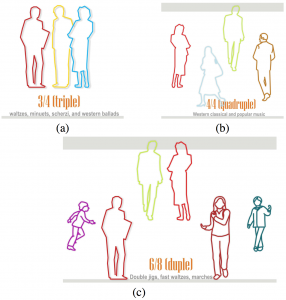

(a) Three people in the space trigger the melody to play in 3/4 time. (b) Four people in the space trigger the melody to play in 4/4 time. (c) Six people in the space trigger the melody to play in 6/8 time.
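The player-count-to-metre rule in the caption amounts to a lookup table. The sketch below is a hypothetical illustration rather than the original implementation. The three table entries come from the caption. Keeping the current metre for any other count is an assumption. The accent pattern is a simplified music-theory illustration: simple metres stress only the first beat, while compound metres such as 6/8 stress the first beat of each group of three.

```python
# Count-to-metre table from the caption; other counts are not specified there.
TIME_SIGNATURES = {3: (3, 4), 4: (4, 4), 6: (6, 8)}

def time_signature_for(num_people, current=(4, 4)):
    """Return (beats per bar, beat unit) for the detected player count.

    Assumption: counts not listed in the caption keep the current metre.
    """
    return TIME_SIGNATURES.get(num_people, current)

def accent_pattern(signature):
    """Simplified strong/weak pattern for one bar of the given metre."""
    beats, unit = signature
    if unit == 8 and beats % 3 == 0 and beats > 3:
        # Compound metre (e.g. 6/8): eighth notes grouped in threes.
        return ["strong" if i % 3 == 0 else "weak" for i in range(beats)]
    # Simple metre: only the downbeat is stressed in this simplification.
    return ["strong" if i == 0 else "weak" for i in range(beats)]

for people in (3, 4, 6):
    sig = time_signature_for(people)
    print(people, sig, accent_pattern(sig))
```

The table makes the point of panel (c) visible. The 6/8 bar contains six eighth notes, but the accent pattern groups them into two beats of three, not six equal beats.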

This system was built using simple computer vision techniques and predates the position- and gesture-sensing technologies that are prevalent today (Kinect, Leap Motion, etc.).

Khoo, E., Merritt, T., Fei, L.F., Liu, W., Rahaman, H., Prasad, J., Marsh, T. 2008. Body Music: Physical Exploration of Music Theory. In Proceedings of the 2008 ACM SIGGRAPH Symposium on Videogames (Los Angeles, California, August 10, 2008). Sandbox ’08. ACM, New York, NY, 35-42.